Coming up with good abstractions is a hard problem as you need to have a deep understanding of both your subject matter and what your abstraction will be used for. It’s a problem we obsess over at Duffel as we want to build APIs people love to use.

However, abstractions are often like a box of chocolates: you never quite know what you're going to get. The functions you thought you understood can always be hiding an unwanted behaviour you've missed.

We came across an example of this recently when doing a thorough performance investigation of a code path. We found a peculiar performance issue when we made calls to Unleash, a service where we store feature flags. We use those flags to quickly and easily turn features on and off in our live system. We run an Unleash server on our network, and the instances of our app connect to that server to read feature flags.

This post covers some complex Elixir concepts. We suggest exploring the linked resources and extra reading if there are any details that are new to you.

Spotting the problem

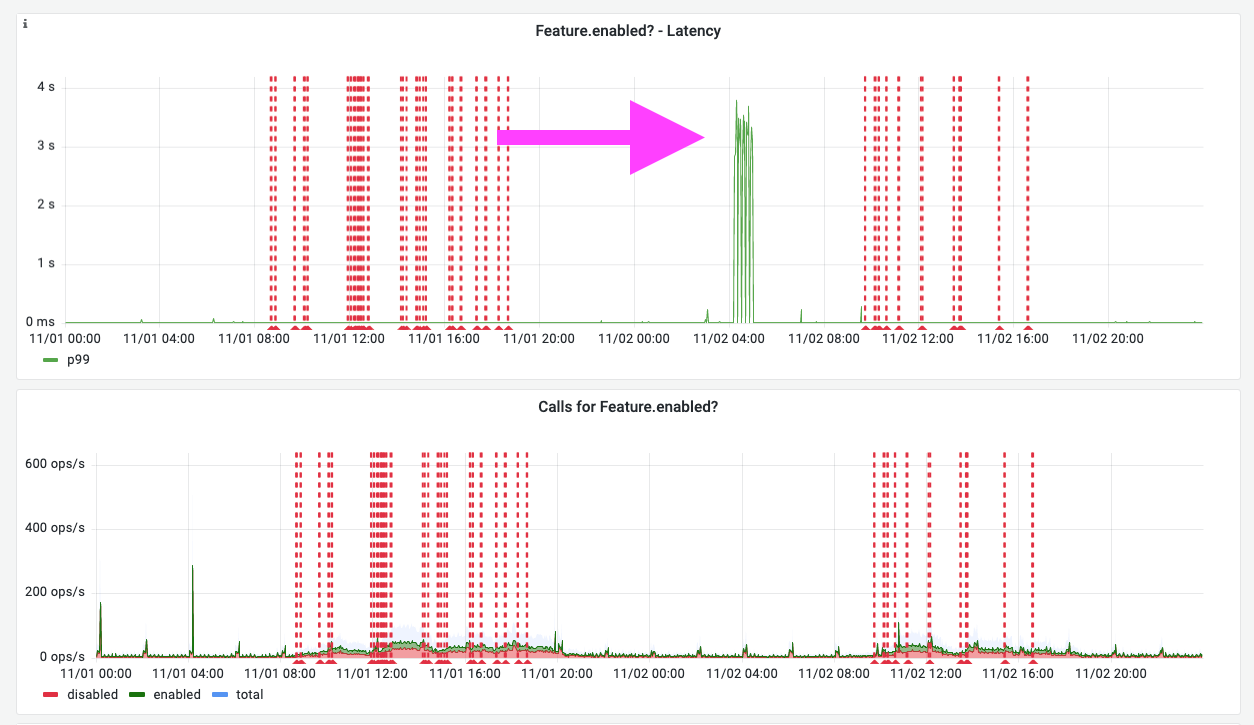

Since the issue was on the critical path of a lot of our requests we knew we had to prioritise a fix. For example, we often need to check if a customer has access to a loyalty program before returning the corresponding ticket offers. We wanted to gain insight into the latencies we observed when fetching feature flag values. We added a Prometheus metric to track those latencies and this yielded the following graph in Grafana:

As you can see in the graph, reading a feature flag sometimes took much longer than we expected. We managed to correlate those spikes with times when our infrastructure was under significant load.

As it turns out, the third-party client we use to query the service had fallen into a pitfall of a key abstraction used throughout Elixir codebases: GenServers.

Elixir's abstraction for keeping state: GenServers

Variables in Elixir are immutable, which allows developers to avoid a whole set of common programming bugs (e.g. concurrent access to a variable). When faced with the problem of storing and accessing state, one of the tools in an Elixir developer's toolbox is the GenServer.

A GenServer is an abstraction for the server part of a classic client-server interaction. At its core, it exposes a set of callbacks to implement, which are triggered when a message arrives in the process's mailbox. For example, when someone sends a message to our process via GenServer.call/2 or GenServer.cast/2, this triggers a synchronous or asynchronous request to the server respectively.

In the case of handling an asynchronous call, the function one must implement in the GenServer is handle_cast/2, whose signature is as follows:

    handle_cast(request, state)

In the handle_cast function we receive the state argument, which is the local state that our GenServer manages. We can perform any required operations and return our modified state, which will be passed to handle_cast when it is next triggered.
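To make the difference between the two kinds of request concrete, here is a minimal sketch of a counter. The `Counter` module and its function names are made up for illustration; only the `GenServer` functions and callbacks come from Elixir itself.

```elixir
defmodule Counter do
  use GenServer

  # Client API: thin wrappers around GenServer.cast/2 and GenServer.call/2.
  def start_link(initial), do: GenServer.start_link(__MODULE__, initial)
  def increment(pid), do: GenServer.cast(pid, :increment)
  def value(pid), do: GenServer.call(pid, :value)

  # Server callbacks
  @impl true
  def init(initial), do: {:ok, initial}

  # Asynchronous: the caller does not wait, so we only return the new state.
  @impl true
  def handle_cast(:increment, count), do: {:noreply, count + 1}

  # Synchronous: the caller blocks until we reply with the current state.
  @impl true
  def handle_call(:value, _from, count), do: {:reply, count, count}
end

{:ok, pid} = Counter.start_link(0)
Counter.increment(pid)
Counter.increment(pid)
IO.inspect(Counter.value(pid))
```

Messages from one sender to one receiver are delivered in order, so both increments are handled before the call that reads the value.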

For the sake of completeness, here is a simple example of what a GenServer implementing a stack looks like. If you wish to dive deeper, the official GenServer documentation covers it in detail.

    defmodule Stack do
      use GenServer

      # Callbacks

      @impl true
      def init(stack) do
        {:ok, stack}
      end

      @impl true
      def handle_call(:pop, _from, [head | tail]) do
        {:reply, head, tail}
      end

      @impl true
      def handle_cast({:push, element}, state) do
        {:noreply, [element | state]}
      end
    end

Another advantage of using GenServers is that we overcome the problem of using a single global process. Since the GenServer runs in its own VM Process, a crash will not bring down your whole application.

The Unleash client

Feature flags stored in Unleash do not change often and network calls are expensive. To save on these network calls, the client library we use to interact with the Unleash server uses GenServer state to cache the feature flag values.

Here's a great cooking metaphor to better understand the relative speeds of data access in software:

Imagine you are preparing a stir fry and must chop up all your ingredients. You can collect your ingredients and leave them on the chopping board before starting so they are ready when you need them. This is like fetching data from RAM. Alternatively, you can collect each ingredient from the fridge when you need it. This is like fetching data from the disk. The last option is to go to the supermarket to buy the ingredients when you need them. This is like doing a network call to fetch data.

The Unleash client library periodically refreshes the stored values by fetching all flags in the Unleash instance, updating the cache stored in the GenServer's state – collecting the ingredients before they’re needed. Someone asking for a feature flag's value will read it from the GenServer's state rather than triggering an expensive network call.
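The caching pattern described above can be sketched roughly as follows. This is not the client library's actual code: `FlagCache`, `fetch_fun` (a stand-in for the network call to the Unleash server), the 15-second default interval and the flag name are all assumptions made for illustration.

```elixir
defmodule FlagCache do
  use GenServer

  # `fetch_fun` is a hypothetical stand-in for the network call that
  # returns a map of flag name => boolean.
  def start_link(fetch_fun, interval_ms \\ 15_000) do
    GenServer.start_link(__MODULE__, {fetch_fun, interval_ms})
  end

  # Every read is a message sent to the single cache process.
  def enabled?(pid, flag), do: GenServer.call(pid, {:enabled?, flag})

  @impl true
  def init({fetch_fun, interval_ms}) do
    schedule_refresh(interval_ms)
    {:ok, %{flags: fetch_fun.(), fetch_fun: fetch_fun, interval_ms: interval_ms}}
  end

  # Serve the value from the cached state; unknown flags count as disabled.
  @impl true
  def handle_call({:enabled?, flag}, _from, state) do
    {:reply, Map.get(state.flags, flag, false), state}
  end

  # Periodic refresh: replace the cached flags and schedule the next one.
  @impl true
  def handle_info(:refresh, state) do
    schedule_refresh(state.interval_ms)
    {:noreply, %{state | flags: state.fetch_fun.()}}
  end

  defp schedule_refresh(interval_ms) do
    Process.send_after(self(), :refresh, interval_ms)
  end
end

{:ok, pid} = FlagCache.start_link(fn -> %{"loyalty_programme" => true} end)
IO.inspect(FlagCache.enabled?(pid, "loyalty_programme"))
```

Note that both the refresh and every read go through the same process mailbox, which matters for what follows.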

The solution

An easily missed implication of using GenServers is that handle_call/3 is invoked sequentially, one message at a time, as messages arrive. In most cases, this is the intended behaviour since it prevents race conditions when two operations compete with each other.

However, in the case of this Unleash client library, it means that multiple processes trying to get the GenServer's cached feature flag values will hit a bottleneck since the read operations will be executed sequentially. This means that when the system is under load, we will take longer to respond to requests and default to treating some features as unavailable.
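The queueing effect is easy to reproduce in isolation. In the sketch below, `SlowCache` is a hypothetical module and the 20 ms sleep is an artificial exaggeration of the time spent handling one read; it is there only to make the serialisation visible.

```elixir
defmodule SlowCache do
  use GenServer

  @impl true
  def init(flags), do: {:ok, flags}

  @impl true
  def handle_call({:get, flag}, _from, flags) do
    # Artificial delay standing in for a busy server process.
    Process.sleep(20)
    {:reply, Map.get(flags, flag, false), flags}
  end
end

{:ok, pid} = GenServer.start_link(SlowCache, %{"some_flag" => true})

# Ten processes ask for the flag at the same time.
{micros, results} =
  :timer.tc(fn ->
    1..10
    |> Enum.map(fn _ -> Task.async(fn -> GenServer.call(pid, {:get, "some_flag"}) end) end)
    |> Task.await_many()
  end)

IO.puts("10 concurrent reads took #{div(micros, 1000)} ms")
```

Although the ten callers run concurrently, the total time is about ten times the cost of a single read (around 200 ms here), because the server handles one message before moving on to the next.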

The fix for this problem is to rely on an alternative abstraction that gives us more control over how our code accesses the stored data: ETS tables. Putting data in an ETS table allows us to specify that we'd like to allow concurrent reads, meaning that multiple processes can read the stored value at the same time. Doing this gets rid of the cache access bottleneck, thereby solving the performance issue we observed; instead of forming a queue, multiple processes can read feature flags at the same time.
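As a minimal sketch of concurrent reads from ETS, assuming a table name (`:flags_demo`) and a flag name chosen purely for illustration:

```elixir
# read_concurrency: true tunes the table for many simultaneous readers.
table = :ets.new(:flags_demo, [:set, :protected, :named_table, read_concurrency: true])

# Only the owning process may write to a :protected table...
:ets.insert(table, {"some_flag", true})

# ...but any process can read from it directly, without sending a message.
lookup = fn flag ->
  case :ets.lookup(:flags_demo, flag) do
    [{^flag, enabled}] -> enabled
    [] -> false
  end
end

# Ten processes read at the same time; none of them waits in a mailbox queue.
results =
  1..10
  |> Enum.map(fn _ -> Task.async(fn -> lookup.("some_flag") end) end)
  |> Task.await_many()

IO.inspect(Enum.all?(results))
```

Each lookup runs in the calling process, so readers no longer depend on how busy a single server process is.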

Once the problem was identified, the fix was straightforward so we implemented it and opened a PR in the open source library to share the fix. We're grateful to the maintainer for promptly merging the fix and open sourcing this library in the first place.

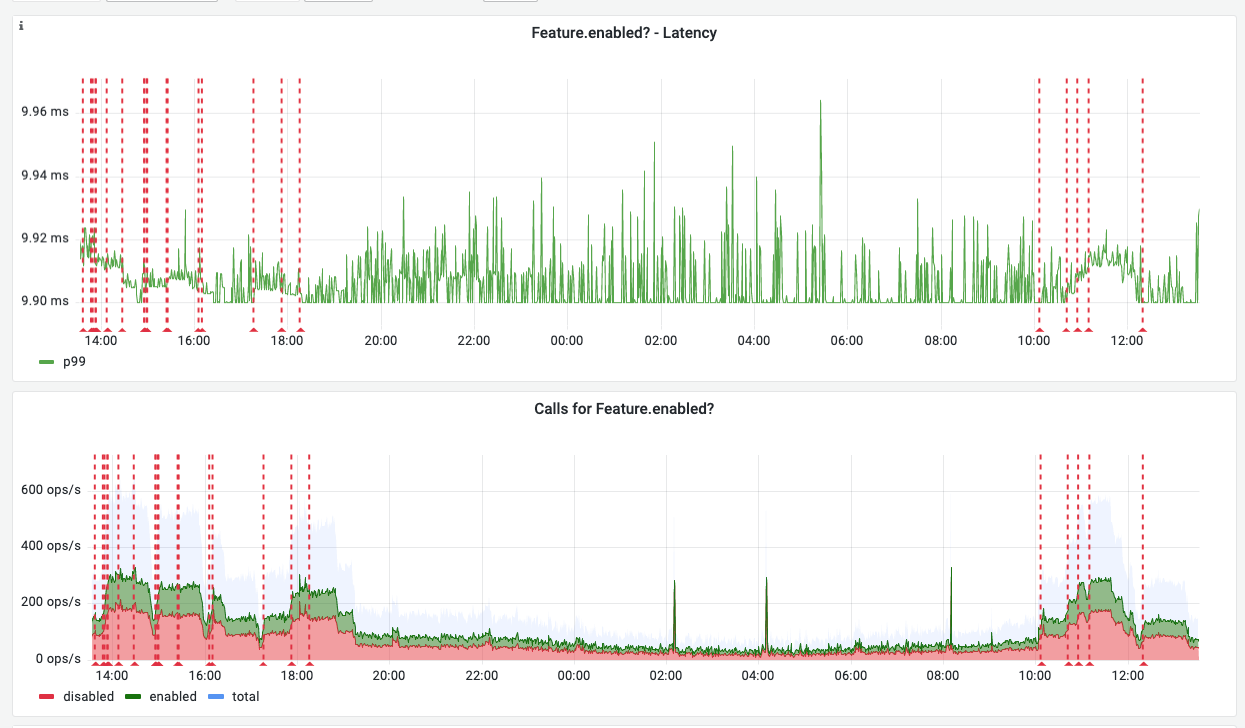

We soon observed that the latency spikes disappeared as shown in the following graph:

When to use GenServers and when not to

GenServers are a good fit when you need to keep track of some state with sequential reads and writes. Be mindful of the fact that the callbacks will trigger sequentially as messages arrive in the process’s mailbox. Our fix for the Unleash library changed the way we read the cached values to allow for concurrent reads while keeping the GenServer to periodically poll the Unleash service and manage the ETS table’s state.
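The resulting division of labour might look something like the sketch below. It illustrates the general shape only and is not the code from the merged PR: `EtsFlagCache`, `fetch_fun` (a stand-in for the call to the Unleash server), the table name and the polling interval are all assumptions.

```elixir
defmodule EtsFlagCache do
  use GenServer

  @table :ets_flag_cache

  # `fetch_fun` is a hypothetical stand-in for the network call that
  # returns a map of flag name => boolean.
  def start_link(fetch_fun, interval_ms \\ 15_000) do
    GenServer.start_link(__MODULE__, {fetch_fun, interval_ms})
  end

  # Reads go straight to ETS in the caller's process; no message is sent.
  def enabled?(flag) do
    case :ets.lookup(@table, flag) do
      [{^flag, enabled}] -> enabled
      [] -> false
    end
  end

  @impl true
  def init({fetch_fun, interval_ms}) do
    :ets.new(@table, [:set, :protected, :named_table, read_concurrency: true])
    state = %{fetch_fun: fetch_fun, interval_ms: interval_ms}
    refresh(state)
    {:ok, state}
  end

  @impl true
  def handle_info(:refresh, state) do
    refresh(state)
    {:noreply, state}
  end

  # Only the owning GenServer writes; it also schedules the next poll.
  defp refresh(state) do
    :ets.insert(@table, Enum.to_list(state.fetch_fun.()))
    Process.send_after(self(), :refresh, state.interval_ms)
  end
end

{:ok, _pid} = EtsFlagCache.start_link(fn -> %{"some_flag" => true} end)
IO.inspect(EtsFlagCache.enabled?("some_flag"))
```

The GenServer still serialises the writes, which is what we want, while reads bypass its mailbox entirely. This simplified version never deletes flags that disappear from the server; a real implementation would need to handle that.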

The issue we solved illustrates why building abstractions is hard: because they hide implementation details, users might come across behaviour they did not expect. You might assume that a GenServer allows concurrent calls, but in fact, messages are processed sequentially. If naming is the hardest problem in computer science, then building good abstractions is probably a close second. Deep knowledge of the libraries and the frameworks we use is crucial in delivering good software.

If you are passionate about writing great abstractions you should join us at Duffel!
